We Asked a Veteran CX Leader What's Wrong with AI Support Today. Her Answer Surprised Us.

Published on August 25, 2025

Looking to cut through the AI hype and understand what customer experience leaders actually need? Our conversation with veteran CX leader Lauren Volpe, CCXP, reveals a stark disconnect between what vendors are selling and what frontline teams are experiencing. While conferences overflow with "revolutionary" AI solutions promising ticket deflection, CX leaders are struggling with overlooked fundamentals like legacy system integration and change management.

We recently spoke with Lauren Volpe, CCXP, a veteran customer experience leader who's worked with multiple high-growth companies, to understand what's really happening on the frontlines of AI implementation in customer experience.

Her insights paint a picture quite different from what many vendors are selling.

There's a scene playing out at every B2B SaaS conference these days: rows of nearly identical booths, all promising revolutionary AI solutions for customer experience. One company at a recent conference even embraced this reality with a banner that simply read "Just Another AI Booth."

It's exhausting. As Lauren put it, "It feels like you're at a Hawker's Fest and everybody's saying, ‘I've got this and I've got that.’"

But amid this noise, what do CX leaders actually care about? What problems are they trying to solve? And why are so many AI vendors missing the mark?

The Legacy System Problem No One's Talking About

While vendors focus on showcasing advanced AI capabilities, Lauren pointed out something many are overlooking: integration with legacy systems.

"None of them have a clue if these big tools can sit on top of their old legacy systems. Nobody's checking. Nobody knows. They're just assuming," she shared, recounting how she witnessed one company make a "very expensive mistake" based on this assumption.

This highlights a critical disconnect. Vendors are racing to build the shiniest AI solutions while ignoring the foundational questions of implementation. For many enterprise organizations, the challenge isn't finding an AI solution (there are many nowadays) – it's finding one that works with their existing systems.

Overwhelmed and Unclear: The Customer Problem Statement

Another insight that emerged from our conversation was how overwhelmed CX leaders feel by the bombardment of AI offerings.

"There's AI for everything. Every single email you open, it says, we've got AI for this and that and the other thing," Lauren noted. This overwhelming array of options has left many leaders paralyzed, unable to determine which solution might actually solve their problems.

In response, Lauren has been helping companies write clear problem statements to cut through the noise. Before evaluating any AI solution, she encourages teams to articulate exactly what they're trying to accomplish. This seems obvious, but in the rush to adopt AI, many organizations are skipping this crucial step.

The Communication Gap: Bringing Teams Along

Perhaps the most overlooked aspect of AI implementation is change management. Lauren shared a revealing story about introducing AI chatbots at a previous company:

"Our agents went into a fit. They started panicking: 'Oh my god, I'm going to lose my job.'"

The solution wasn't to abandon the technology but to communicate its purpose more clearly. "I'm not trying to eliminate your job," she explained to her team. "I'm trying to elevate you and give you an opportunity to level up so that you're not handling level one questions for the rest of your career."

Once the team understood they were being upskilled rather than replaced, resistance transformed into enthusiasm. Yet many organizations are missing this crucial step – planning their AI implementation without bringing frontline teams into the conversation early enough.

What Do CX Leaders Actually Want?

Beyond the hype, what are customer experience leaders actually looking for? According to Lauren, the priority isn't headcount reduction or ticket deflection, but rather improving the human elements of support:

"Anything that can improve efficiency without losing that human touch is where you're going to be able to get the most bang for your buck or deliver the most ROI for your customers."

She highlighted several specific needs that aren't being adequately addressed:

- Gap detection in customer journeys: Tools that can automatically identify missing steps or friction points in the customer experience, replacing manual journey mapping exercises.

- Knowledge management automation: Solutions that keep documentation current without requiring dedicated headcount. "These companies as they grow and scale, you lose track of your documentation so quickly," Lauren observed, recounting how at a previous company she "had to hire somebody at 90k a year to keep track of these documents" due to regulatory requirements.

- Prevention over deflection: Rather than just redirecting support queries, CX leaders want to prevent them entirely by making products more intuitive from day one.

- Elevation, not elimination: Tools that allow support professionals to focus on higher-value work instead of answering repetitive queries.
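The gap-detection idea in the first item can be illustrated with a simple funnel analysis. The sketch below is one possible approach, not a description of any specific product. It assumes a hypothetical onboarding funnel (the step names in `FUNNEL` are invented for illustration), event logs supplied as `(user_id, event_name)` pairs, and an arbitrary 50% drop-off threshold. It then flags every transition where a larger share of users stalls than the threshold allows.

```python
from collections import defaultdict

# Hypothetical onboarding funnel; step names and order are illustrative assumptions.
FUNNEL = ["signed_up", "connected_data_source", "created_first_report", "invited_teammate"]


def find_gaps(events, funnel=FUNNEL, max_dropoff=0.5):
    """Return (from_step, to_step, dropoff) for transitions losing more than max_dropoff of users.

    `events` is an iterable of (user_id, event_name) pairs.
    """
    reached = defaultdict(set)  # event name -> users who triggered it
    for user_id, event_name in events:
        reached[event_name].add(user_id)

    cohort = reached[funnel[0]]  # users who entered the funnel
    gaps = []
    for prev_step, step in zip(funnel, funnel[1:]):
        # A user "reaches" a step only if they also reached every earlier step.
        next_cohort = cohort & reached[step]
        if cohort:
            dropoff = 1 - len(next_cohort) / len(cohort)
            if dropoff > max_dropoff:
                gaps.append((prev_step, step, round(dropoff, 2)))
        cohort = next_cohort
    return gaps


sample_events = [
    ("u1", "signed_up"), ("u2", "signed_up"), ("u3", "signed_up"), ("u4", "signed_up"),
    ("u1", "connected_data_source"), ("u2", "connected_data_source"), ("u3", "connected_data_source"),
    ("u1", "created_first_report"),
    ("u1", "invited_teammate"),
]
print(find_gaps(sample_events))  # [('connected_data_source', 'created_first_report', 0.67)]
```

Because it only checks whether each event occurred, this sketch ignores event order and timing. A fuller version would also measure how long users stall between steps.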

The Patience Approach

Interestingly, not everyone feels the urgency to adopt AI immediately. Lauren shared an anecdote about a panelist at a recent conference who expressed zero FOMO about AI adoption:

"I'm perfectly happy to wait another 3 months. Let everybody sort this out for me, and then I'll get on board."

While this wait-and-see approach raised eyebrows, it highlights an important counterpoint to the rush toward adoption. Some leaders are deliberately choosing to let the market mature before committing resources – a strategy that could save them from costly missteps.

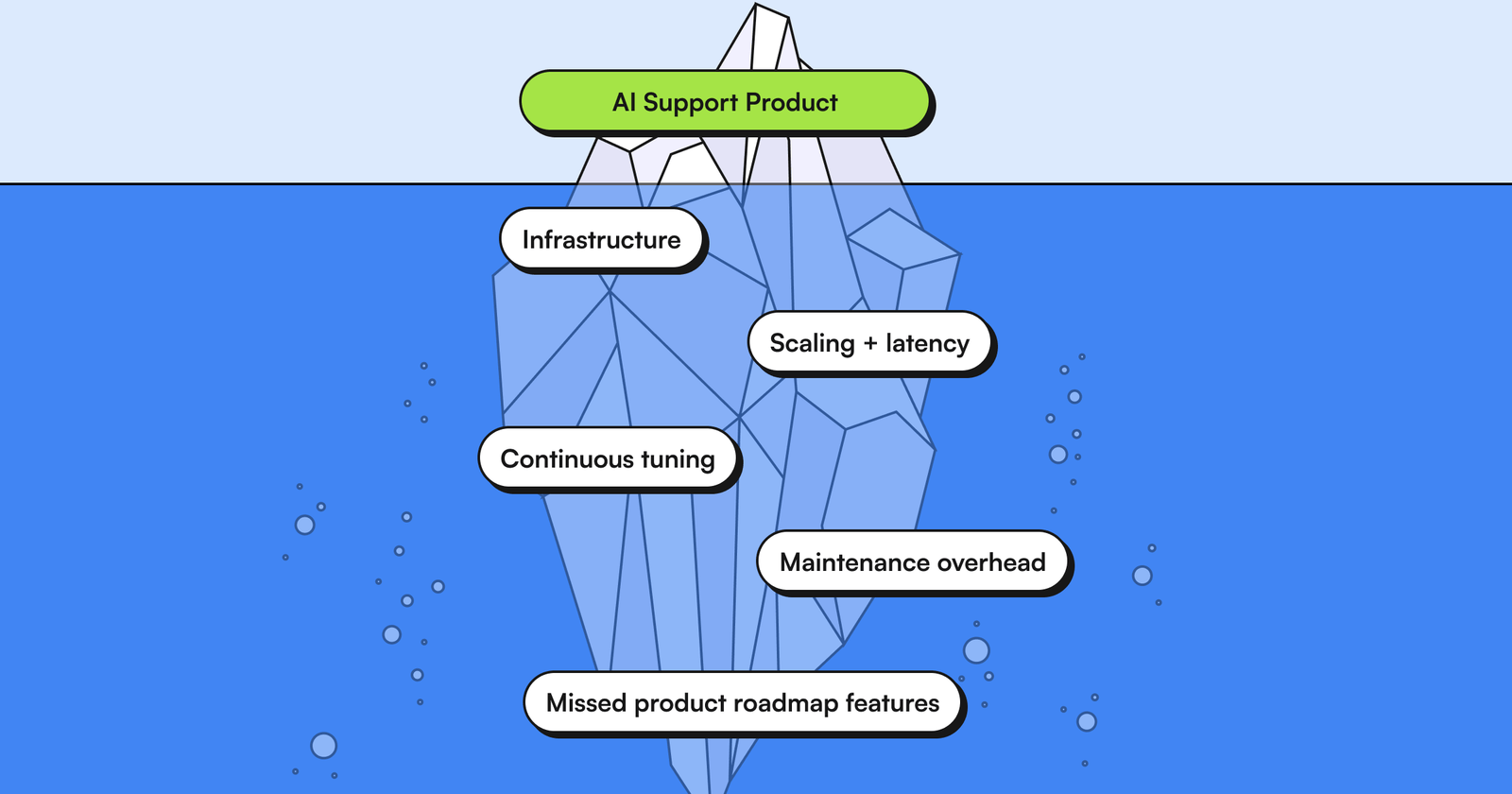

Ambient AI Agents: The Future of Customer Experience

The conversation with Lauren revealed there’s still a significant gap between what vendors are selling and what CX leaders need. While the market is flooded with solutions promising to automate away customer support, pragmatic leaders are looking for tools that enhance human capabilities rather than replace them.

"Why don't we just make it easier for the customer to understand the product from day one, and that way, they don't even need to go to the help center?" Lauren asked, pointing out that many companies are "going for the pie in the sky and missing the ladder to get up there."

This is where ambient AI agents represent a different approach. Unlike traditional chatbots or help centers that force users to break their workflow to seek assistance, ambient AI works quietly in the background by observing, learning, and adapting to how people naturally use products.

Think of how a modern GPS app (Apple Maps, Google Maps, Waze, etc.) learns and adjusts to each user’s route. The GPS observes behavior, identifies patterns, and provides guidance exactly when needed. This creates an experience that feels natural.
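The observe, identify, guide loop in the GPS analogy can be sketched in a few lines. This is a hedged illustration, not how any particular ambient AI product works. It assumes the product emits one event per user action, recording which feature was used and whether the action succeeded. It uses a hypothetical rule: offer contextual help after `max_failures` failed attempts on the same feature within a sliding time window. The feature name and both thresholds are invented for illustration.

```python
import time
from collections import defaultdict, deque


class FrictionWatcher:
    """Suggests help after repeated failures on one feature (hypothetical heuristic).

    window_seconds and max_failures are illustrative values, not tuned thresholds.
    """

    def __init__(self, window_seconds=60.0, max_failures=3, clock=time.monotonic):
        self.window_seconds = window_seconds
        self.max_failures = max_failures
        self.clock = clock  # injectable so the behavior can be tested deterministically
        self.failures = defaultdict(deque)  # feature -> timestamps of recent failures

    def observe(self, feature, succeeded):
        """Record one user action; return True when contextual help should be offered."""
        now = self.clock()
        recent = self.failures[feature]
        if succeeded:
            recent.clear()  # the user got past this step, so forget earlier struggles
            return False
        recent.append(now)
        # Discard failures that have fallen outside the sliding window.
        while recent and now - recent[0] > self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_failures:
            recent.clear()  # avoid re-offering help on every subsequent failure
            return True
        return False


# Simulated timestamps stand in for a real clock.
fake_clock = iter([0.0, 10.0, 20.0, 200.0, 210.0]).__next__
watcher = FrictionWatcher(clock=fake_clock)
offers = [watcher.observe("export_csv", succeeded=False) for _ in range(5)]
print(offers)  # [False, False, True, False, False]
```

Injecting the clock keeps the heuristic testable. Clearing the window after offering help keeps the guidance from becoming one more interruption.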

In the customer experience context, ambient intelligence means…

- Documentation that automatically stays current as products evolve

- Context-aware assistance that appears exactly when users need it

- Proactive identification of friction points before they generate support tickets

- Continuous learning from real user behavior to prevent future issues
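Keeping documentation current starts with knowing which articles have fallen behind the product. The following is a minimal sketch under stated assumptions. Each help article records its last edit date and the (hypothetical) feature identifiers it covers, and a per-feature release date is available, for example from a changelog. An article is flagged when any feature it documents shipped a change after the article was last edited. This is a simple staleness heuristic, not a description of any specific tool.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class HelpArticle:
    title: str
    last_updated: date
    covers: list = field(default_factory=list)  # hypothetical feature identifiers


def stale_articles(articles, feature_changed):
    """Return (title, changed_features) for articles older than a feature they document.

    `feature_changed` maps a feature identifier to the date of its most recent release.
    """
    stale = []
    for article in articles:
        changed_since = [
            feature
            for feature in article.covers
            if feature in feature_changed and feature_changed[feature] > article.last_updated
        ]
        if changed_since:
            stale.append((article.title, sorted(changed_since)))
    return stale


articles = [
    HelpArticle("Exporting reports", date(2025, 3, 1), ["export_csv", "export_pdf"]),
    HelpArticle("Inviting teammates", date(2025, 6, 15), ["invites"]),
]
feature_changed = {
    "export_csv": date(2025, 5, 20),
    "export_pdf": date(2025, 1, 10),
    "invites": date(2025, 6, 1),
}
print(stale_articles(articles, feature_changed))  # [('Exporting reports', ['export_csv'])]
```

A check like this only tells a team where to look. Rewriting the flagged article is still a separate step, whether done by a person or an automated system.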

The most promising approach appears to be creating a more natural, intuitive product experience that prevents support issues before they happen. It's about enabling support teams to provide more value by handling strategic work while ambient AI handles the routine questions and guides users naturally through their journey.

As AI in customer experience continues to evolve, the winners won't be those who shout the loudest about their AI capabilities. They'll be the vendors who truly understand the human side of customer experience and build solutions that enhance it rather than diminish it.

For companies evaluating AI solutions, Lauren's advice is clear: start with a precise problem statement, verify compatibility with existing systems, and bring your team along from the beginning. The goal is to solve real problems for your customers and your team.

This article was based on a conversation with Lauren Volpe, CCXP, a veteran customer experience leader who has worked with multiple high-growth companies. The insights shared reflect her personal experience and observations from recent industry conferences.

```python
import time

# import requests  # Needed only for the real NeMo Evaluator HTTP call shown in comments below.

from opentelemetry import metrics, trace
from opentelemetry.sdk.metrics import MeterProvider
from opentelemetry.sdk.metrics.export import ConsoleMetricExporter, PeriodicExportingMetricReader
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import ConsoleSpanExporter, SimpleSpanProcessor

# --- 1. OpenTelemetry Setup for Observability ---
# Configure exporters to print telemetry data to the console.
# In a production system, these would export to a backend like Prometheus or Jaeger.
trace.set_tracer_provider(TracerProvider())
tracer = trace.get_tracer(__name__)
span_processor = SimpleSpanProcessor(ConsoleSpanExporter())
trace.get_tracer_provider().add_span_processor(span_processor)

metric_reader = PeriodicExportingMetricReader(ConsoleMetricExporter())
metrics.set_meter_provider(MeterProvider(metric_readers=[metric_reader]))
meter = metrics.get_meter(__name__)

# Create custom OpenTelemetry metrics
agent_latency_histogram = meter.create_histogram("agent.latency", unit="ms", description="Agent response time")
agent_invocations_counter = meter.create_counter("agent.invocations", description="Number of times the agent is invoked")
# Synchronous gauges (create_gauge) require a recent opentelemetry-api/sdk release.
# The recorded value is a fraction (0.0-1.0), so the dimensionless unit "1" is used.
hallucination_rate_gauge = meter.create_gauge("agent.hallucination_rate", unit="1", description="Fraction of hallucinated responses")
pii_exposure_counter = meter.create_counter("agent.pii_exposure.count", description="Count of responses with PII exposure")


# --- 2. Define the Agent using NeMo Agent Toolkit concepts ---
# The NeMo Agent Toolkit orchestrates agents, tools, and workflows, often via configuration.
# This class simulates an agent that would be managed by the toolkit.
class MultimodalSupportAgent:
    def __init__(self, model_endpoint):
        self.model_endpoint = model_endpoint

    # The toolkit would route incoming requests to this method.
    def process_query(self, query, context_data):
        # Start an OpenTelemetry span to trace this specific execution.
        with tracer.start_as_current_span("agent.process_query") as span:
            start_time = time.perf_counter()  # monotonic clock, suited to measuring durations
            span.set_attribute("query.text", query)
            span.set_attribute("context.data_types", [type(d).__name__ for d in context_data])

            # In a real scenario, this would involve complex logic and tool calls.
            print(f"\nAgent processing query: '{query}'...")
            time.sleep(0.5)  # Simulate work (e.g., tool calls, model inference)
            agent_response = f"Generated answer for '{query}' based on provided context."
            latency = (time.perf_counter() - start_time) * 1000

            # Record metrics
            agent_latency_histogram.record(latency)
            agent_invocations_counter.add(1)
            span.set_attribute("agent.response", agent_response)
            span.set_attribute("agent.latency_ms", latency)
            return {"response": agent_response, "latency_ms": latency}


# --- 3. Define the Evaluation Logic using NeMo Evaluator ---
# This function simulates calling the NeMo Evaluator microservice API.
def run_nemo_evaluation(agent_response, ground_truth_data):
    with tracer.start_as_current_span("evaluator.run") as span:
        print("Submitting response to NeMo Evaluator...")
        # In a real system, you would make an HTTP request to the NeMo Evaluator service.
        # eval_endpoint = "http://nemo-evaluator-service/v1/evaluate"
        # payload = {"response": agent_response, "ground_truth": ground_truth_data}
        # response = requests.post(eval_endpoint, json=payload)
        # evaluation_results = response.json()

        # Mocking the evaluator's response for this example.
        time.sleep(0.2)  # Simulate network and evaluation latency
        mock_results = {
            "answer_accuracy": 0.95,
            "hallucination_rate": 0.05,
            "pii_exposure": False,
            "toxicity_score": 0.01,
            "latency": 25.5,
        }
        span.set_attribute("eval.results", str(mock_results))
        print(f"Evaluation complete: {mock_results}")
        return mock_results


# --- 4. The Main Agent Evaluation Loop ---
def agent_evaluation_loop(agent, query, context, ground_truth):
    with tracer.start_as_current_span("agent_evaluation_loop") as parent_span:
        # Step 1: Agent processes the query (its span becomes a child of this one)
        output = agent.process_query(query, context)

        # Step 2: Response is evaluated by NeMo Evaluator
        eval_metrics = run_nemo_evaluation(output["response"], ground_truth)

        # Step 3: Log evaluation results using OpenTelemetry metrics
        hallucination_rate_gauge.set(eval_metrics.get("hallucination_rate", 0.0))
        if eval_metrics.get("pii_exposure", False):
            pii_exposure_counter.add(1)

        # Add evaluation metrics as events to the parent span for rich, contextual traces.
        parent_span.add_event("EvaluationComplete", attributes=eval_metrics)

        # Step 4: (Optional) Trigger retraining or alerts based on metrics
        if eval_metrics.get("answer_accuracy", 0.0) < 0.8:
            print("[ALERT] Accuracy has dropped below threshold! Triggering retraining workflow.")
            parent_span.set_status(trace.Status(trace.StatusCode.ERROR, "Low Accuracy Detected"))


# --- Run the Example ---
if __name__ == "__main__":
    support_agent = MultimodalSupportAgent(model_endpoint="http://model-server/invoke")

    # Simulate an incoming user request with multimodal context
    user_query = "What is the status of my recent order?"
    context_documents = ["order_invoice.pdf", "customer_history.csv"]
    ground_truth = {"expected_answer": "Your order #1234 has shipped."}

    # Execute the loop
    agent_evaluation_loop(support_agent, user_query, context_documents, ground_truth)

    # In a real application, the metric reader would run in the background.
    # We call it explicitly here to see the output.
    metric_reader.collect()
```

Join the Brainfish blog to receive the latest insights every other week.
