Compliance-Grade AI: How High-Governance Teams Pilot Without Risk

Published on October 22, 2025

If you’ve ever watched a two-week AI pilot take three months to approve, you’re not alone. For high-governance teams, innovation doesn’t fail from lack of interest (because AI is interesting!). It fails from lack of proof. Discover how CX leaders are launching “zero-blast-radius” pilots that win over legal, security, and IT while unlocking measurable ROI with Brainfish’s compliance-grade AI.

For many enterprise CX and Support leaders, adopting AI feels like standing at the edge of innovation while governance holds you back. We’ve heard it all:

“We can’t even start a pilot until legal approves data residency.”

“Our compliance team needs proof we can turn it off instantly.”

“We’d need SOC 2 and clear data isolation to move forward.”

“We’re governed like a bank even though we’re a tech company.”

You’ve seen what’s possible with AI. Faster answers, real-time updates, intelligent self-service. But in your world, every experiment is another spreadsheet of compliance checks, SOC 2 reports, and legal reviews that take longer than the pilot itself.

At one global healthcare provider, a support leader summed it up perfectly:

“The AI pilot took three months to approve, two weeks to run, and one afternoon to prove its value.”

That’s the paradox of high-governance innovation: teams know there’s value, but can’t afford a misstep.

This is where compliance-grade AI comes in: tools and frameworks designed not to evade oversight, but to embrace it. Here’s how modern CX teams are running successful AI pilots without creating risk.

The Hidden Friction of AI Pilots

Every vendor promises “five-minute setup.” But for organizations in regulated sectors like healthcare, finance, education, and government, setup isn’t necessarily the hard part. Approval is.

Before the first API call, governance teams will ask things like:

- Where does the data live?

- Can we audit model outputs?

- What if AI surfaces sensitive or outdated information?

These are valid questions. According to Gartner’s 2024 Hype Cycle for Generative AI, 80% of enterprises plan to establish formal AI risk frameworks by 2026.

But most pilots fail before those frameworks even exist.

The good news is you don’t need to choose between innovation and compliance! You just need a pilot built to pass scrutiny. (Ahem, that’s Brainfish.)

Step 1: Contain the Blast Radius

Start small, on purpose.

We’ve seen teams run zero-blast-radius pilots: tightly scoped, low-risk, and fully reversible. The goal isn’t speed to scale but speed to certainty.

Here’s what that looks like:

- Limited Scope: Test within one dataset or a single help center category (e.g., FAQs or product documentation).

- Non-Production Data: Use sanitized or anonymized sources.

- Instant Kill Switch: Maintain the ability to disable the system immediately if necessary.

When risk is contained, approvals move faster. And when approvals move faster, innovation finally has air to breathe. Whew.
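To make the scope limit and kill switch above concrete, here is a minimal sketch. The `PilotGuard` class and the category names are hypothetical, invented for illustration; they are not part of any Brainfish API.

```python
from dataclasses import dataclass


@dataclass
class PilotGuard:
    """Hypothetical guard for a tightly scoped, reversible pilot."""

    allowed_categories: frozenset  # the only content the pilot may touch
    enabled: bool = True           # the kill switch

    def kill(self) -> None:
        """Disable the pilot immediately; every later request is refused."""
        self.enabled = False

    def permits(self, category: str) -> bool:
        """Allow a request only if the pilot is on and the category is in scope."""
        return self.enabled and category in self.allowed_categories


guard = PilotGuard(allowed_categories=frozenset({"faq"}))
in_scope = guard.permits("faq")          # inside the pilot's single category
out_of_scope = guard.permits("billing")  # outside the scope, so refused
guard.kill()
after_kill = guard.permits("faq")        # refused once the switch is thrown
```

Putting both checks in one place means a reviewer only has to audit a single function to confirm the pilot cannot wander outside its scope.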

Step 2: Make Security a Feature

When your governance and IT teams can see how an AI system works, they stop blocking and start championing.

At Brainfish, security is trust in product form.

- SOC 2 Type II certified.

- Data encryption at rest and in transit using AES-256.

- Regional data residency (US, EU, APAC) for compliance with GDPR and local data sovereignty laws.

- Zero customer data used to train global models.

- Clear retention and deletion policies for all content and query logs.

And for teams calculating potential breach exposure, these controls matter: the IBM 2024 Cost of a Data Breach Report found that the average regulated enterprise faces $5.04 million in breach costs - nearly 30% higher than unregulated peers.

Security, in other words, is ROI protection.
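A retention policy like the one in the list above is easiest to review when it is expressed as code. The sketch below assumes a 30-day window and a simple list of log entries; both are illustrative assumptions, not Brainfish defaults.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)  # assumed window, chosen only for illustration


def purge_expired(logs, now):
    """Return only the log entries still inside the retention window."""
    cutoff = now - RETENTION
    return [entry for entry in logs if entry["logged_at"] >= cutoff]


now = datetime(2025, 10, 22, tzinfo=timezone.utc)
logs = [
    {"id": 1, "logged_at": now - timedelta(days=45)},  # past retention
    {"id": 2, "logged_at": now - timedelta(days=3)},   # still retained
]
kept = purge_expired(logs, now)
```

Passing `now` in explicitly keeps the function deterministic, which makes the policy straightforward to test and to demonstrate during a review.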

Brainfish’s “Compliance by Design” Framework

Isolation: Customer data never leaves regional boundaries.

Residency: Content stays in-country with full export control.

Traceability: Every AI-generated answer includes inline citations to its original source.

Revocability: Admins can instantly purge, disable, or audit data pipelines.

Auditability: All actions, queries, and outputs are logged and exportable for compliance review.

That’s what turns Brainfish from an AI tool into an audit-ready platform.
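As a rough illustration of the auditability property, the sketch below keeps an append-only log and exports it as JSON Lines. The `AuditLog` class and its field names are hypothetical and do not describe how Brainfish stores its logs.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only log of actions, queries, and outputs."""

    def __init__(self):
        self._entries = []

    def record(self, actor, action, detail):
        """Append one timestamped entry and return it."""
        entry = {
            "at": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "detail": detail,
        }
        self._entries.append(entry)
        return entry

    def export_jsonl(self):
        """Serialize every entry as one JSON object per line."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self._entries)


log = AuditLog()
log.record("user:42", "query", "How do I reset my password?")
log.record("admin:7", "disable_pipeline", "faq-pilot")
exported = log.export_jsonl()
```

JSON Lines is used here only because most log tooling can ingest it; any export format a compliance team already accepts would serve the same purpose.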

Step 3: Pair AI Autonomy with Human Oversight

The fastest way to lose trust in AI is to make it untraceable.

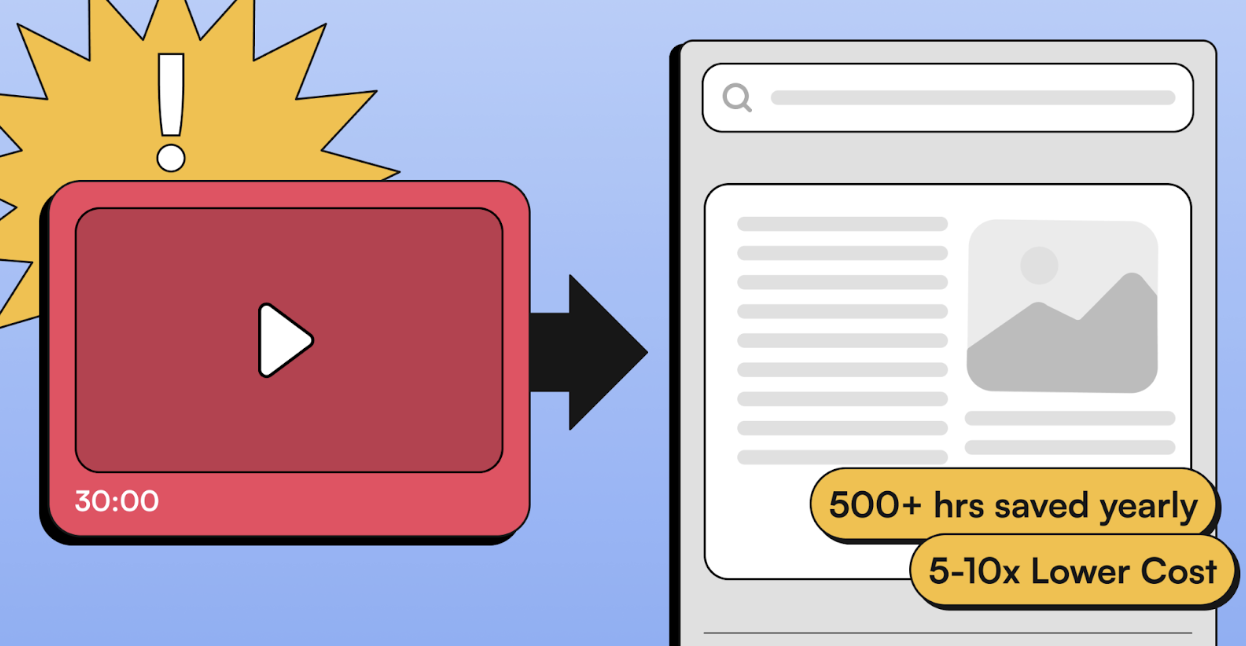

Brainfish solves this by keeping every AI response transparent. Each answer links directly to the exact document, timestamp, or video segment it came from.

That means every insight can be verified, every mistake corrected, and every action audited.
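One way to picture this is an answer object that carries its citations, plus a check that routes anything uncited to a person. The `Answer` and `Citation` types below are hypothetical, sketched under the assumption that a citation is a source identifier plus a locator such as a heading or timestamp.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    source_id: str  # e.g. a document or video identifier
    locator: str    # e.g. a section heading or timestamp


@dataclass(frozen=True)
class Answer:
    text: str
    citations: tuple = ()


def needs_human_review(answer: Answer) -> bool:
    """Flag any answer that cannot be traced back to at least one source."""
    return len(answer.citations) == 0


cited = Answer(
    "Reset it under Settings > Security.",
    (Citation("help-center/passwords", "Resetting a password"),),
)
uncited = Answer("Probably under Settings somewhere.")
```

In this sketch `needs_human_review(uncited)` is true, so the uncited answer would be held for a person to verify instead of being shown to a customer.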

As HBR notes in The AI Transparency Paradox, transparency in AI systems helps mitigate distrust by exposing decision paths and data usage.

This is human-in-the-loop intelligence at work: the AI moves fast, but the humans stay in control.

And it’s not just theoretical - see how Smokeball reduced its search-to-ticket ratio by 74% while maintaining strict compliance and auditability.

Step 4: Document the Documentation

In high-governance environments, a successful pilot isn’t enough: you need to prove it succeeded safely.

That’s why every Brainfish pilot ends with a “Compliance Readiness Summary”, a one-page internal artifact detailing:

- Data flow map: what data moved where, and when.

- Access summary: who could see or export what.

- Security checklist: encryption, SOC 2, and data residency confirmations.

- Pilot results: self-service rate, accuracy, and time saved.

This summary becomes your ticket to expand the pilot across regions or product lines.
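The four sections of such a summary map naturally onto a small data structure. The sketch below is one hypothetical shape for it; the field names and every sample value are placeholders, not real pilot data or a Brainfish format.

```python
from dataclasses import dataclass


@dataclass
class ReadinessSummary:
    """Hypothetical one-page summary of a finished pilot."""

    data_flows: list          # (source, destination, date) tuples
    access: dict              # role -> list of permissions
    security_checklist: dict  # control name -> confirmed?
    results: dict             # metric name -> value

    def open_items(self):
        """List the security controls that are still unconfirmed."""
        return sorted(k for k, ok in self.security_checklist.items() if not ok)


# Placeholder values for illustration only.
summary = ReadinessSummary(
    data_flows=[("help-center export", "pilot workspace", "2025-10-01")],
    access={"support-lead": ["view", "export"], "agent": ["view"]},
    security_checklist={"encryption": True, "soc2_report": True, "data_residency": False},
    results={"self_service_rate": 0.62},
)
```

Here `summary.open_items()` returns `["data_residency"]`, the one control still awaiting confirmation before the pilot can expand.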

Step 5: Measure Value and Safety Side by Side

Most AI metrics focus on productivity - tickets deflected, hours saved, CSAT scores.

But in a compliance-first pilot, safety is a KPI too.

The most forward-thinking CX leaders track both:

| Value Metrics | Safety Metrics |
| --- | --- |
| Self-service success rate | Zero data incidents |
| Time-to-answer | Audit log completeness |
| Ticket reduction | Compliance SLA adherence |
| Content freshness | Governance approval cycle time |

This dual lens reflects what PwC’s Responsible AI Framework calls “balanced accountability” - ensuring AI creates measurable business value while staying verifiably safe.
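Tracking both kinds of metric can be as simple as computing them in the same function. The sketch below covers one value metric and two safety metrics from the table; the function name, input shape, and sample sessions are all assumptions made for illustration.

```python
def pilot_scorecard(sessions, incidents, logged_queries, total_queries):
    """Summarize one value metric and two safety metrics for a pilot."""
    resolved = sum(1 for s in sessions if s["resolved_without_ticket"])
    return {
        "self_service_success_rate": resolved / len(sessions),
        "data_incidents": incidents,
        "audit_log_completeness": logged_queries / total_queries,
    }


# Made-up sample data: four sessions, three resolved without a ticket.
sessions = [
    {"resolved_without_ticket": True},
    {"resolved_without_ticket": True},
    {"resolved_without_ticket": False},
    {"resolved_without_ticket": True},
]
scorecard = pilot_scorecard(sessions, incidents=0, logged_queries=4, total_queries=4)

# The pilot passes the safety bar only with zero incidents and a complete audit log.
passes_safety = (
    scorecard["data_incidents"] == 0
    and scorecard["audit_log_completeness"] == 1.0
)
```

Reporting the two groups from one scorecard keeps a strong self-service rate from hiding a gap in the audit log.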

Step 6: Turn Governance into a Growth Advantage

Brainfish’s regional data controls, inline audit logs, and human-verifiable output have made it a go-to for regulated organizations across industries.

Because when you design for trust, expansion follows naturally.

Ambient AI support agents can then operate safely in production, backed by content that continuously self-updates and analytics that prove performance.

The Future of Safe Innovation

The next era of AI won’t be defined by how fast teams deploy, but by how responsibly they do it.

AI systems that observe, learn, and act in real time - what Brainfish calls ambient AI - will only thrive when they can prove reliability to every stakeholder: Support, Product, Legal, and Security.

That’s why Brainfish embeds governance into every workflow: SOC 2 by default, residency by region, human verification for every answer, and full visibility for compliance.

“AI adoption fails because the process isn’t considered safe.”

Fix the process, and innovation finally gets to scale.

The Governance Champion’s Advantage

Every successful AI rollout inside a regulated organization starts with one person: the Governance Champion, the bridge between innovation and oversight.

Brainfish gives them the tools, audit trails, and evidence they need to say “yes” (or even “hell yes”) with confidence.

Takeaway

By containing risk, designing for auditability, and measuring trust alongside ROI, CX and Support leaders can modernize without compromise.

AI that passes compliance isn’t luck!

```python
import time

from opentelemetry import trace, metrics
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.metrics import MeterProvider
from opentelemetry.sdk.trace.export import ConsoleSpanExporter, SimpleSpanProcessor
from opentelemetry.sdk.metrics.export import ConsoleMetricExporter, PeriodicExportingMetricReader

# --- 1. OpenTelemetry Setup for Observability ---
# Configure exporters to print telemetry data to the console.
# In a production system, these would export to a backend like Prometheus or Jaeger.
trace.set_tracer_provider(TracerProvider())
tracer = trace.get_tracer(__name__)
span_processor = SimpleSpanProcessor(ConsoleSpanExporter())
trace.get_tracer_provider().add_span_processor(span_processor)

metric_reader = PeriodicExportingMetricReader(ConsoleMetricExporter())
metrics.set_meter_provider(MeterProvider(metric_readers=[metric_reader]))
meter = metrics.get_meter(__name__)

# Create custom OpenTelemetry metrics
agent_latency_histogram = meter.create_histogram("agent.latency", unit="ms", description="Agent response time")
agent_invocations_counter = meter.create_counter("agent.invocations", description="Number of times the agent is invoked")
# create_gauge needs an opentelemetry-api release with synchronous gauge support.
# The value recorded below is a fraction (0.0 to 1.0), so the unit is "1", not a percentage.
hallucination_rate_gauge = meter.create_gauge("agent.hallucination_rate", unit="1", description="Fraction of hallucinated responses")
pii_exposure_counter = meter.create_counter("agent.pii_exposure.count", description="Count of responses with PII exposure")


# --- 2. Define the Agent using NeMo Agent Toolkit concepts ---
# The NeMo Agent Toolkit orchestrates agents, tools, and workflows, often via configuration.
# This class simulates an agent that would be managed by the toolkit.
class MultimodalSupportAgent:
    def __init__(self, model_endpoint):
        self.model_endpoint = model_endpoint

    # The toolkit would route incoming requests to this method.
    def process_query(self, query, context_data):
        # Start an OpenTelemetry span to trace this specific execution.
        with tracer.start_as_current_span("agent.process_query") as span:
            # perf_counter is monotonic, so it is safer than time.time() for intervals.
            start_time = time.perf_counter()
            span.set_attribute("query.text", query)
            span.set_attribute("context.data_types", [type(d).__name__ for d in context_data])

            # In a real scenario, this would involve complex logic and tool calls.
            print(f"\nAgent processing query: '{query}'...")
            time.sleep(0.5)  # Simulate work (e.g., tool calls, model inference)
            agent_response = f"Generated answer for '{query}' based on provided context."
            latency = (time.perf_counter() - start_time) * 1000

            # Record metrics
            agent_latency_histogram.record(latency)
            agent_invocations_counter.add(1)
            span.set_attribute("agent.response", agent_response)
            span.set_attribute("agent.latency_ms", latency)
            return {"response": agent_response, "latency_ms": latency}


# --- 3. Define the Evaluation Logic using NeMo Evaluator ---
# This function simulates calling the NeMo Evaluator microservice API.
def run_nemo_evaluation(agent_response, ground_truth_data):
    with tracer.start_as_current_span("evaluator.run") as span:
        print("Submitting response to NeMo Evaluator...")

        # In a real system, you would make an HTTP request to the NeMo Evaluator service
        # (this would also require `import requests` at the top of the file).
        # eval_endpoint = "http://nemo-evaluator-service/v1/evaluate"
        # payload = {"response": agent_response, "ground_truth": ground_truth_data}
        # response = requests.post(eval_endpoint, json=payload)
        # evaluation_results = response.json()

        # Mocking the evaluator's response for this example.
        time.sleep(0.2)  # Simulate network and evaluation latency
        mock_results = {
            "answer_accuracy": 0.95,
            "hallucination_rate": 0.05,
            "pii_exposure": False,
            "toxicity_score": 0.01,
            "latency": 25.5,
        }
        span.set_attribute("eval.results", str(mock_results))
        print(f"Evaluation complete: {mock_results}")
        return mock_results


# --- 4. The Main Agent Evaluation Loop ---
def agent_evaluation_loop(agent, query, context, ground_truth):
    with tracer.start_as_current_span("agent_evaluation_loop") as parent_span:
        # Step 1: Agent processes the query
        output = agent.process_query(query, context)

        # Step 2: Response is evaluated by NeMo Evaluator
        eval_metrics = run_nemo_evaluation(output["response"], ground_truth)

        # Step 3: Log evaluation results using OpenTelemetry metrics
        hallucination_rate_gauge.set(eval_metrics.get("hallucination_rate", 0.0))
        if eval_metrics.get("pii_exposure", False):
            pii_exposure_counter.add(1)

        # Add evaluation metrics as events to the parent span for rich, contextual traces.
        parent_span.add_event("EvaluationComplete", attributes=eval_metrics)

        # Step 4: (Optional) Trigger retraining or alerts based on metrics.
        # This example only prints an alert and marks the span as an error.
        if eval_metrics["answer_accuracy"] < 0.8:
            print("[ALERT] Accuracy has dropped below threshold! Triggering retraining workflow.")
            parent_span.set_status(trace.Status(trace.StatusCode.ERROR, "Low Accuracy Detected"))


# --- Run the Example ---
if __name__ == "__main__":
    support_agent = MultimodalSupportAgent(model_endpoint="http://model-server/invoke")

    # Simulate an incoming user request with multimodal context
    user_query = "What is the status of my recent order?"
    context_documents = ["order_invoice.pdf", "customer_history.csv"]
    ground_truth = {"expected_answer": "Your order #1234 has shipped."}

    # Execute the loop
    agent_evaluation_loop(support_agent, user_query, context_documents, ground_truth)

    # In a real application, the metric reader would run in the background.
    # We call it explicitly here to see the output.
    metric_reader.collect()
```
